I've been playing around with ChatGPT. I'm simultaneously impressed by its uncanny ability to appear human, and completely underwhelmed once I start probing deeper.

I think, firstly, it's important to note that web search as a whole is ripe for disruption. The state of the web has been getting steadily worse for the last few years, and it's pretty much entirely Google's fault. Google's ranking metrics encourage web content to be needlessly verbose, and Google is increasingly pushing mass-produced, spammy, generic content over individual and generally more interesting publishers. ChatGPT has the potential to pull the rug from under Google, and that's not just because ChatGPT is impressive (which it definitely is, but also, at the same time, sort of isn't) - it's also because Google isn't doing a good job of maintaining its search engine.

Let's see how ChatGPT performs...

Programming

In terms of code, I can ask for basic boilerplate and get it in reams. For example, if I ask for a function to read standard input line by line, I get a while loop that reads stdin line by line. If I ask for a retry loop to handle database deadlocks, I get a for loop and an exception handler. All syntactically valid. That's really impressive! I've seen people throwing leetcode-style interview questions at it and getting back valid solutions, but that style of question lends itself very easily to regurgitation without much creative thought, whereas programming in general does not.
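To give a flavour of the kind of boilerplate I mean, here's a minimal sketch of a deadlock retry loop in JavaScript. This is not ChatGPT's output. The withDeadlockRetry name, the isDeadlock predicate and the 'DEADLOCK' error code are all made up for illustration; a real database driver has its own error codes and would most likely be asynchronous.

```javascript
// Hypothetical helper: run `operation`, retrying only when it fails with
// an error that `isDeadlock` recognises, up to `maxAttempts` tries.
function withDeadlockRetry(operation, isDeadlock, maxAttempts = 3) {
  for (let attempt = 1; attempt <= maxAttempts; attempt++) {
    try {
      return operation(attempt);
    } catch (err) {
      // Give up on anything that is not a deadlock, or on the final attempt.
      if (!isDeadlock(err) || attempt === maxAttempts) {
        throw err;
      }
    }
  }
}

// Usage with a fake operation that deadlocks once and then succeeds.
// 'DEADLOCK' is an invented error code, not one from a real driver.
const outcome = withDeadlockRetry(
  (attempt) => {
    if (attempt === 1) {
      const err = new Error('transaction was deadlocked');
      err.code = 'DEADLOCK';
      throw err;
    }
    return `succeeded on attempt ${attempt}`;
  },
  (err) => err.code === 'DEADLOCK'
);
console.log(outcome);
```

It really is just a for loop and an exception handler, which is why it's such an easy thing to regurgitate.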

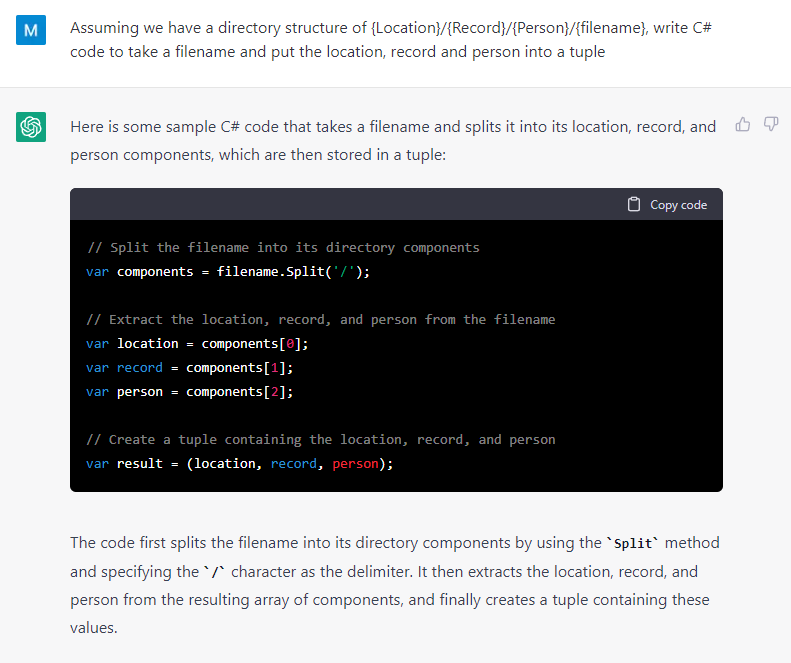

Let's try something a lot simpler than the average leetcode question, but a bit more bespoke:

It pretty much did what I asked for. On the other hand, what it's given me isn't really that useful or applicable to real life. A good human written solution to this would be to use the Path.GetDirectoryName() and Path.GetFileName() methods, which would handle real life paths properly, and to realise that the filename comes at the end of the path, so it's the directories directly above it that matter - not the ones at the beginning. Let's be more specific...

It's interesting that it's now discovered the path parsing methods, but: 1. the repeated calls are pretty horrible, 2. I didn't ask for anything to do with the filename or extension, and 3. the information I did ask for is wrong. Despite its assertion, it actually gives:

location = "some"

unit = "location"

person = "unit"

filename = "filename"

extension = ".extension"

It's trying, but it's confused.
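For comparison, here's a rough sketch of the approach I described above: start from the filename and walk up through the directories directly above it. I'd write this in C# with Path.GetDirectoryName() and Path.GetFileName(); the version below uses Node's path.win32.dirname() and path.win32.basename() as stand-ins. The parsePathLabels name, the location/unit/person folder layout and the sample path are assumptions for illustration, not the exact prompt I used.

```javascript
const path = require('path');

// Assumed layout: <anything>\<location>\<unit>\<person>\<filename>
// The interesting folders are the three directly above the file, so we
// walk upwards from the end of the path instead of counting from the start.
function parsePathLabels(fullPath) {
  const personDir = path.win32.dirname(fullPath);    // strip the filename
  const unitDir = path.win32.dirname(personDir);     // one level up
  const locationDir = path.win32.dirname(unitDir);   // two levels up
  return {
    location: path.win32.basename(locationDir),
    unit: path.win32.basename(unitDir),
    person: path.win32.basename(personDir),
  };
}

// Hypothetical path with extra leading folders that should be ignored.
console.log(parsePathLabels('C:\\archive\\some\\London\\Sales\\Alice\\report.txt'));
```

Because it counts from the end, extra folders at the start of the path don't shift the results, which is exactly where the generated code went wrong.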

Let's try something else.

I asked this question because running Windows specific functionality on NodeJS is an unusual thing to want to do and probably isn't something it's seen verbatim on a blog somewhere, though it probably has seen the component parts in isolation in its training data.

Shelling out to net view is the right approach for this, and it's impressive that it knows this. The attempt to parse the output is totally wrong, but it's actually done something really interesting here. net view on its own will (or used to, before Microsoft disabled it for security reasons) print out a list of servers on the domain, one per line, prefixed with \\. That's exactly what the generated code is parsing.

This is interesting because it's confused two separate things (listing shares vs listing servers) which are both linked by another concept (the net view command).

It's also worth noting that it's splitting lines by Unix-style '\n' line endings instead of Windows-style '\r\n' endings, so each split line would have a trailing '\r', although this doesn't actually affect the correctness of the program in this case.

In summary it's completely wrong, but you can kind of see the high level outline of how it works and what it's doing.
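To make the line ending point concrete, here's a small sketch of parsing the server list, i.e. the thing the generated code was actually parsing instead of shares. The sample text is invented and only assumes what I described above (one server per line, prefixed with \\); the header and footer lines and the parseServerNames name are illustrative. On a real Windows machine the text would come from something like child_process.execSync('net view', { encoding: 'utf8' }).

```javascript
// Invented sample output with Windows '\r\n' line endings.
const sampleOutput = [
  'Server Name            Remark',
  '-------------------------------',
  '\\\\FILESRV01            File server',
  '\\\\PRINTSRV',
  'The command completed successfully.',
  '',
].join('\r\n');

// Splitting on '\n' alone leaves a stray '\r' at the end of each line.
const naiveLines = sampleOutput.split('\n');
console.log(JSON.stringify(naiveLines[3])); // the '\r' is still attached

// Splitting on /\r?\n/ handles both styles; keep only lines starting with \\.
function parseServerNames(output) {
  return output
    .split(/\r?\n/)
    .map((line) => line.trim())
    .filter((line) => line.startsWith('\\\\'))
    .map((line) => line.slice(2).split(/\s+/)[0]);
}

console.log(parseServerNames(sampleOutput));
```

Listing the shares on a particular server needs a different invocation and a different output format, which is the part it got muddled.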

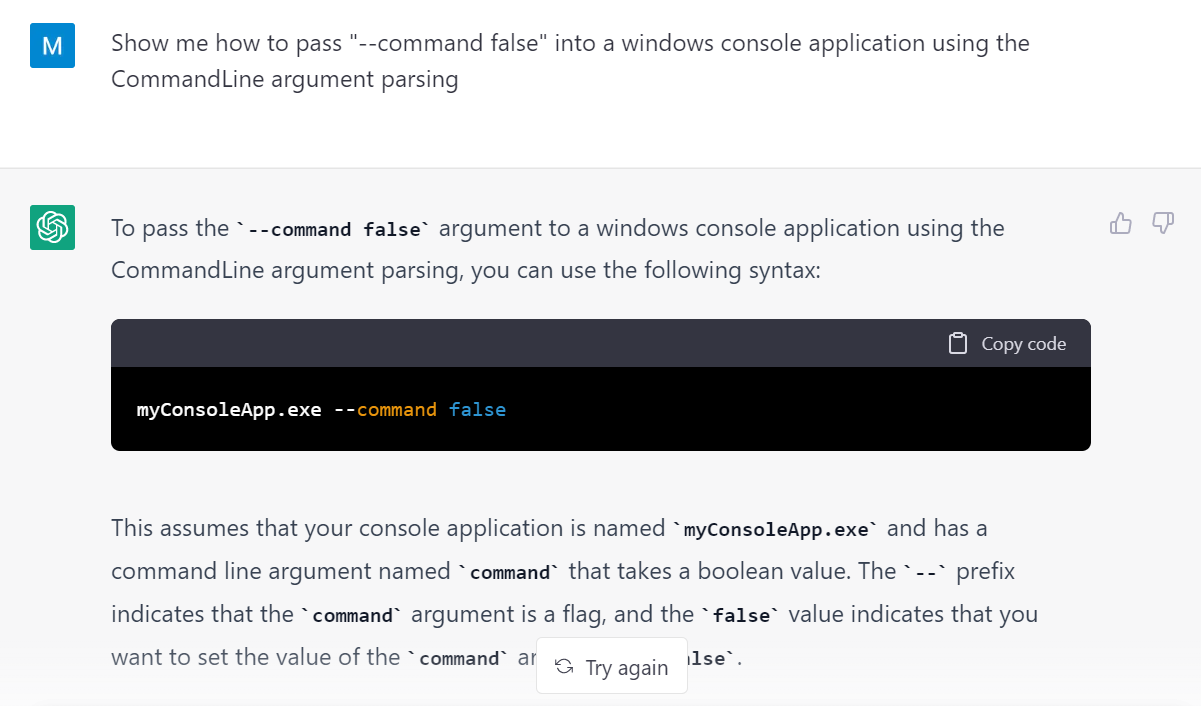

Recently I had to figure out why passing --command false to a C# command line program was populating the argument in the code with true. It turns out that the argument declaration needs to be a nullable boolean, i.e. public bool? Command { get; set; }, otherwise its mere presence is treated as a true value, even if the value is 'false'. Why? I don't know, but that's how it is, and it's the kind of unintuitive detail I go to StackOverflow for. I wanted to see if ChatGPT would fare better than Google and StackOverflow.

Hmm, that's not what I meant, but I could have been more specific. After a bit of coaxing it gave me this:

The code looks plausible to the eye, but the compiler is not so easily convinced. ParseArguments(args, options) isn't a valid call. This doesn't compile, and even if it did, it still hasn't answered my question. Overall: not helpful in the slightest.
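For what it's worth, the behaviour I ran into can be mimicked with a toy parser. This is emphatically not how the real C# library is implemented (I still don't know why it works the way it does); parseToyArgs and the 'switch' / 'value' kinds are made up, and the mapping of bool to a switch and bool? to a value-taking option is my assumption based purely on what I observed.

```javascript
// Toy model only: a 'switch' is true whenever it is present and never looks
// at the next token; a 'value' option consumes the next token as its value.
function parseToyArgs(argv, spec) {
  const parsed = {};
  for (let i = 0; i < argv.length; i++) {
    const name = argv[i].replace(/^--/, '');
    if (spec[name] === 'switch') {
      parsed[name] = true;                 // the following 'false' is ignored
    } else if (spec[name] === 'value') {
      parsed[name] = argv[++i] === 'true'; // the following token is consumed
    }
  }
  return parsed;
}

// Declared like a plain bool: presence alone wins.
console.log(parseToyArgs(['--command', 'false'], { command: 'switch' }));
// Declared like a nullable bool: the supplied value is honoured.
console.log(parseToyArgs(['--command', 'false'], { command: 'value' }));
```

Seen that way the result is at least consistent, even if it's still not what I'd expect from --command false.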

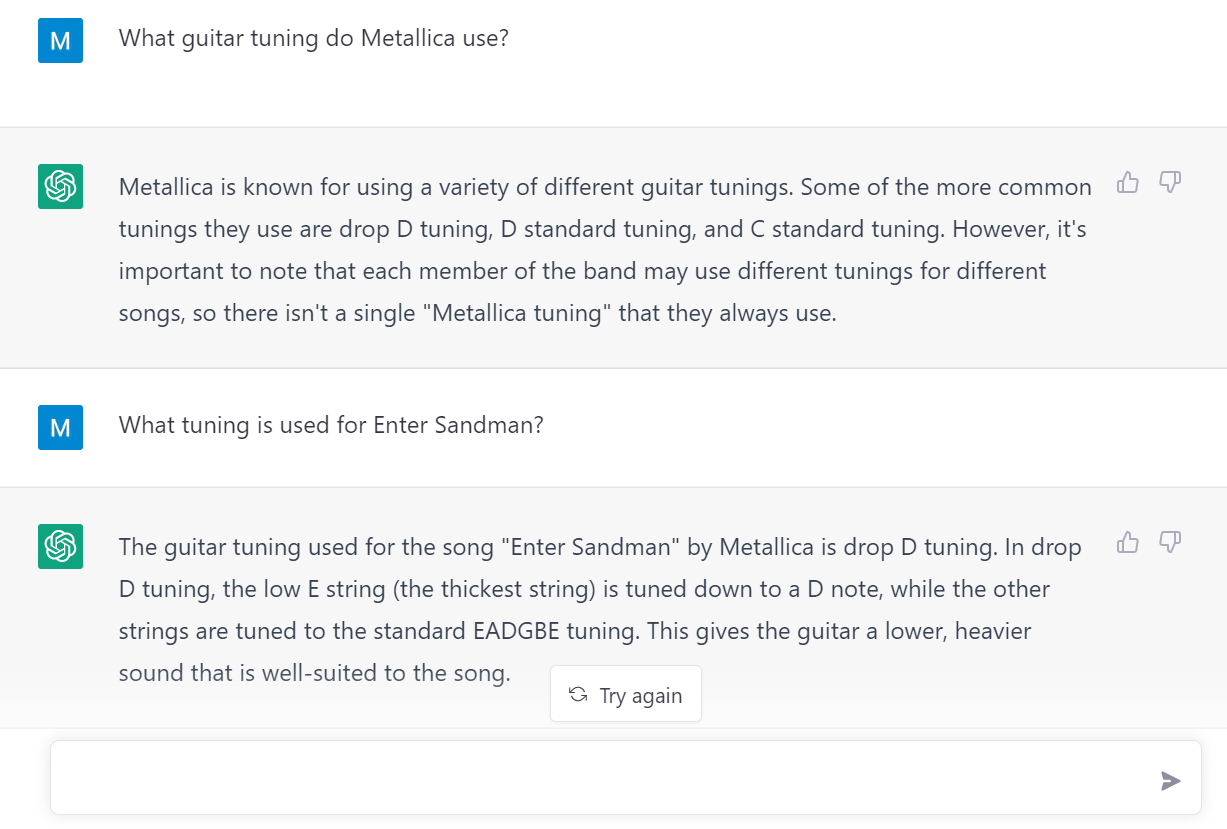

Music knowledge

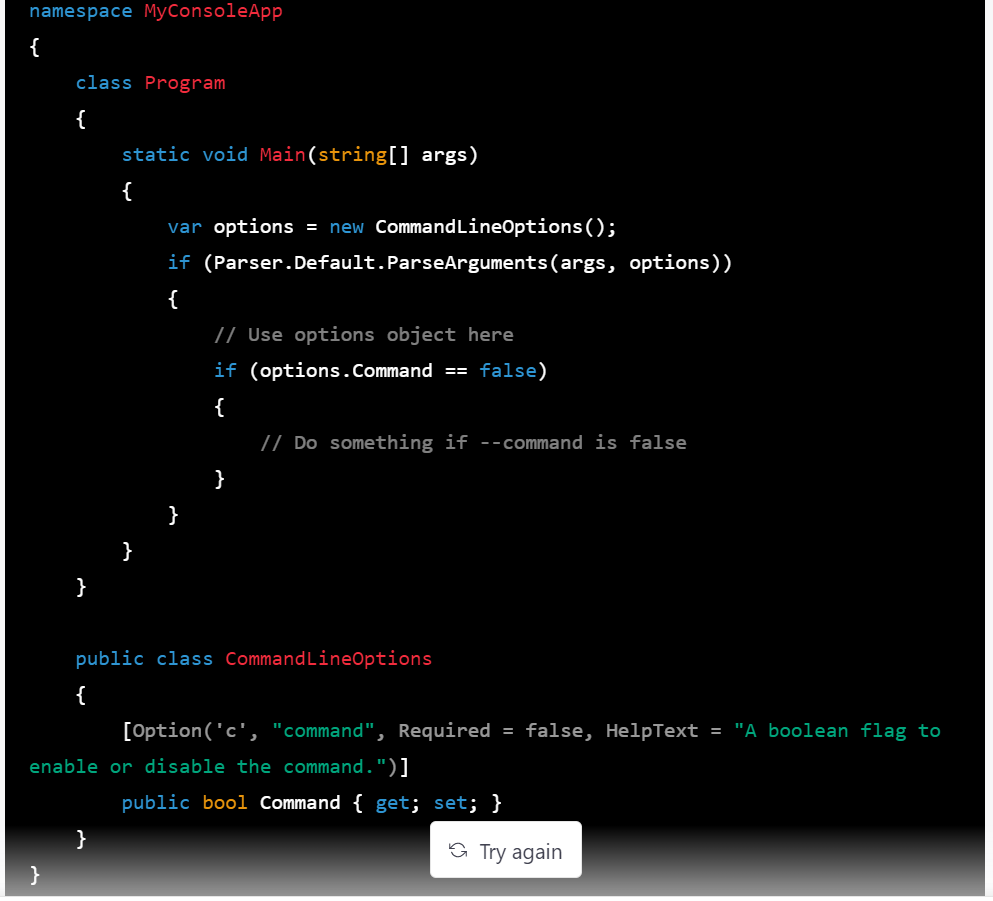

Change of topic. I'm sure there's plenty of discussion in its training set about the world's most famous heavy metal band, so let's see what it knows.

Well, not really. Metallica tend to tune to Eb live, but beyond that their tuning is best described album by album. Early and modern albums are in E standard, with a few Eb albums in the 90s and St Anger in drop C. Occasionally individual songs are in something different, but they are the exception. They've actually only used drop D for two songs, and Enter Sandman isn't one of them. It's using all the right terminology, but it obviously hasn't understood any of it. This answer is pretty much nonsense.

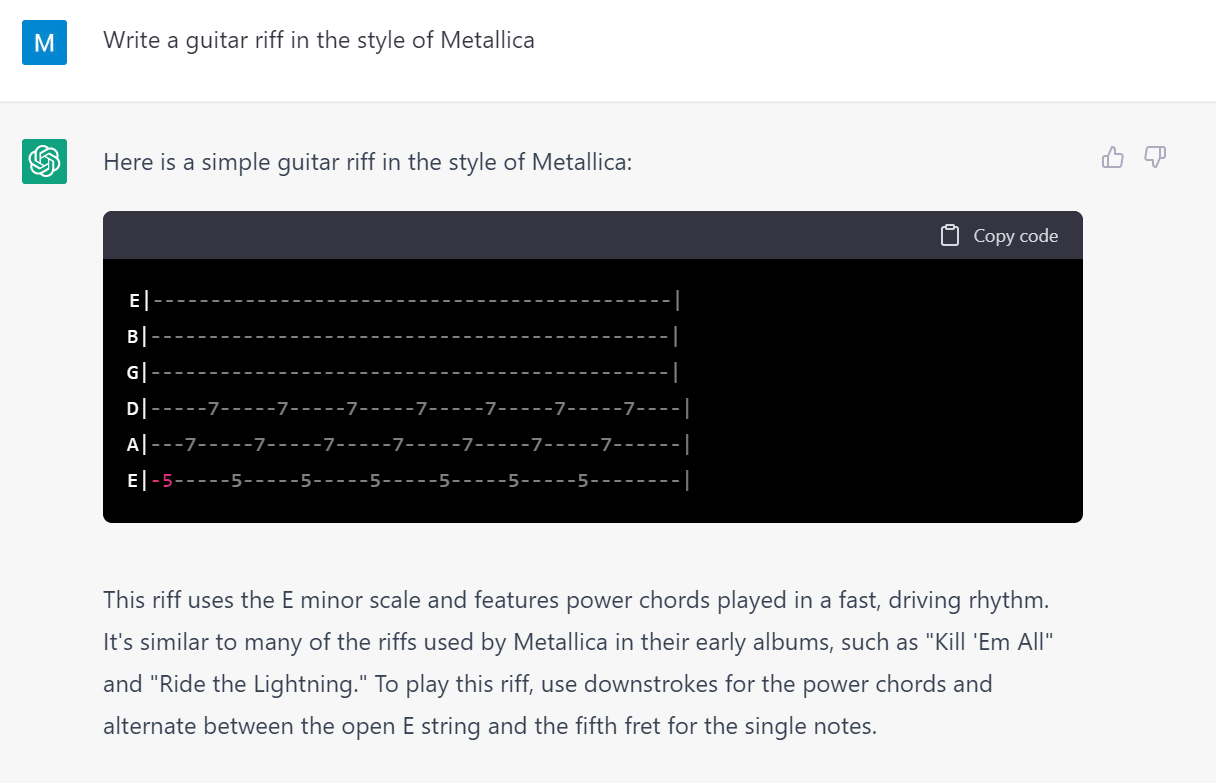

Or how about testing its creative skills:

It's fascinating that it understood the question well enough to generate guitar tablature! But beyond that, contrary to its explanation, this riff is not in the key of Em (it's in A), it doesn't use power chords, it's not really similar to their style on early albums and it doesn't even use the open E string. As for the riff: well, it's a piece of music. But I don't think it's going to replace James Hetfield any time soon.

It's a recurring theme that it will generate guitar tablature while giving explanations that bear no real resemblance:

Hmmm... 🤔

Thoughts

I'm really impressed that it generates valid code, probably even more so than I am that it generates English text (I'm not remotely impressed by the music it writes though). But it's pretty obvious that it doesn't know what it's talking about, and it undermines itself a bit by appearing completely confident while talking nonsense, which means you can only really use it while supervising it very closely.

I wonder if its apparent inability to gauge its own level of knowledge is an inherent problem because it really doesn't understand anything and is therefore incapable of reasoning about its own blind spots, or whether it's something that could improve in future.

I think that for programming it's a pretty long way off being able to reliably generate code that does what you want. It can generate code very quickly, but that's not always a good thing. We've all worked with someone we wished would write less code...

But a lot of programming is asking Google questions about the APIs you're using, and it does seem like it could shorten the "Hmm" -> Google -> StackOverflow -> "Oh!" process.

But if something like this ends up displacing Google or StackOverflow, another question arises: StackOverflow is constantly transcribing new knowledge, which is used to train systems like ChatGPT (either directly, or indirectly by being able to see code that people wrote with the help of StackOverflow). If people stop using StackOverflow in favour of ChatGPT, that knowledge ceases being written down and that presents a problem for training these systems to learn about new technologies in future.

Another point is that StackOverflow's value (for me) is rarely that it is able to put code on my screen. The real insight is in the discussion around it, which can make you aware of things you didn't know or just get you thinking about alternative ideas.